AI can analyze a thousand scans in seconds. But it can’t hear a patient say, ‘Doctor, I just don’t feel right.’ That’s where medicine still belongs to humans.

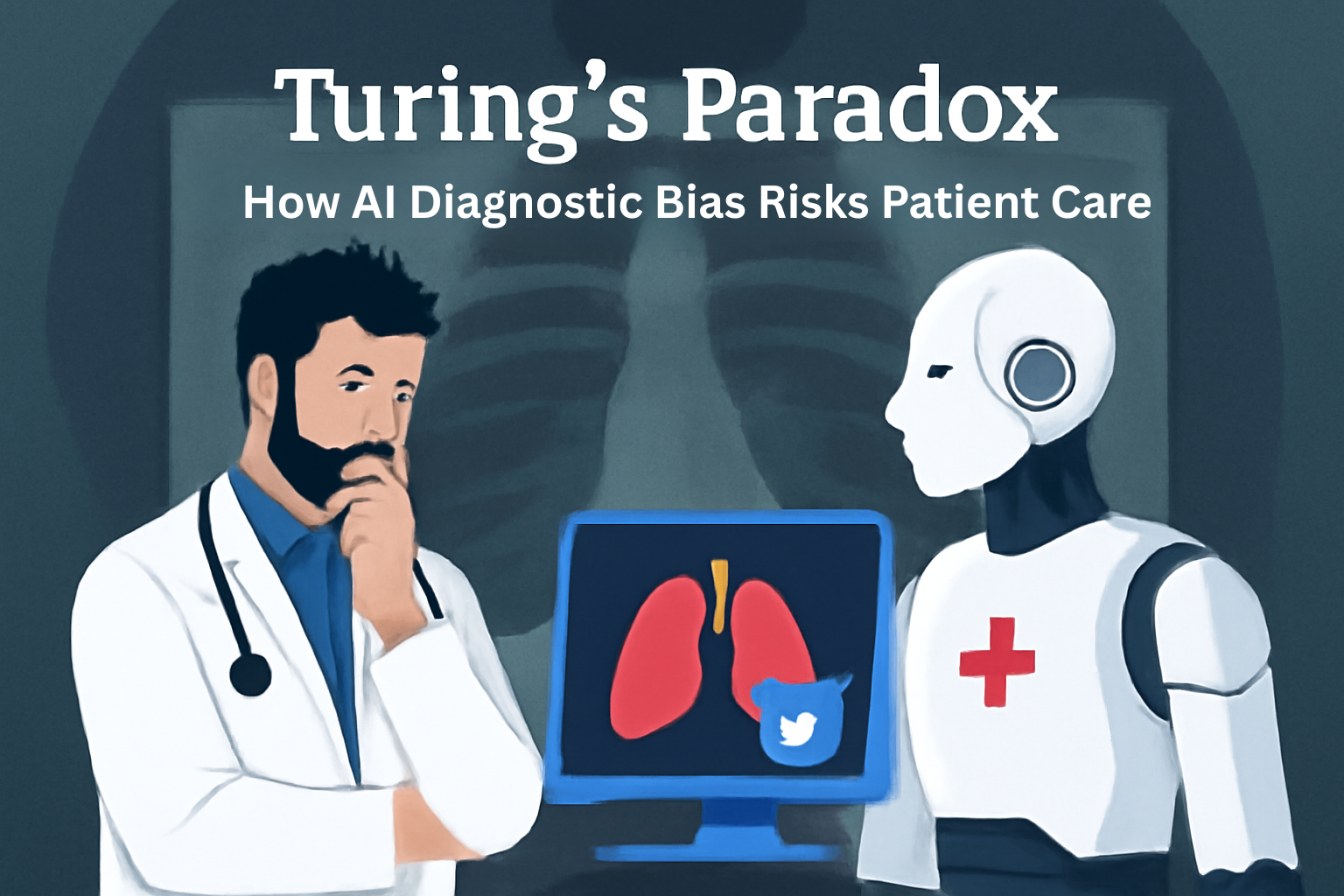

Artificial intelligence (AI) is becoming an integral part of healthcare, especially in the early detection of disease. It’s fast, intelligent, and capable of processing vast amounts of data. Yet for all that power, something essential is still missing: the human touch.

This blog series explores why AI sometimes fails to detect diseases at an early stage. I’ll share real stories in which AI missed the mark and doctors, guided by instinct, experience, and empathy, saw what the machine couldn’t. I wrote this to remind us that medicine isn’t just about test results or algorithms. It’s about listening, observing, and noticing the subtle signs that machines often overlook.

Through this series, I hope to spark a thoughtful conversation about the future of AI in healthcare—and why human judgment remains irreplaceable.

Before Detection, There’s Attention

Before diagnosis comes detection.

Before detection comes attention.

Human doctors begin by noticing, especially when the picture is unclear. Early detection is crucial, but it starts with paying attention to small, often silent signs. This is where AI frequently falls short.

The Promise (and Illusion) of AI in Medicine

AI has made remarkable strides in healthcare. For example:

A 2020 study showed that an AI system for breast cancer screening, compared with human readers, achieved absolute reductions in false positives of 5.7% in the US and 1.2% in the UK, and in false negatives of 9.4% and 2.7%, respectively [1].

A 2025 nationwide real-world study in Germany found that AI-supported mammography screening achieved a 17.6% higher breast cancer detection rate without increasing the recall rate [2].

In critical care, machine learning can predict circulatory failure in ICU patients, enabling earlier intervention, as demonstrated in a 2020 study [3].

Machine learning models can also predict in-hospital cardiac arrest in ICU patients 0.5 to 24 hours in advance, as shown in a 2024 study [4].

Additionally, techniques from natural language processing are being adapted to medical records: Med-BERT, a BERT-style model pretrained on large-scale structured electronic health records, improved disease prediction [5].

These advancements are exciting, but they don’t tell the whole story.

What Doctors Know That AI Doesn’t

Not long ago, I had dinner with a group of fellow doctors. We were discussing AI in healthcare. Many were optimistic, talking about how AI could make faster, more accurate decisions.

Then I shared a story.

Real Story 1: “I Don’t Feel Fine”

A friend of mine—an IT professional—called me one evening.

“I’m tired all the time. I sleep well, I’m not stressed. But something feels off.”

He had used an AI health app, which suggested a panel of tests: thyroid function, complete blood count (CBC), vitamin B12, vitamin D3, blood sugar, and liver and kidney function. All results were normal.

The app concluded:

“Your results are normal. Consider lifestyle changes. If symptoms persist, see a doctor.”

He laughed and said:

“So I’m fine on paper. But I don’t feel fine.”

A few days later, he came to me for a consultation. As we talked, I recognized that beyond his physical symptoms, he was grappling with anxiety, an existential crisis, and fear of losing his job.

This was invisible to the AI but evident to a human doctor who listened carefully, asked the right questions, and understood the emotional context.

This is happening more often now. People turn to AI first because it’s quick and easy, but they are left without answers when something deeper is at play.

Real Story 2: The Missed Diagnosis That Nearly Proved Fatal

A well-known cardiologist once shared a powerful case at a conference.

A 28-year-old woman had been feeling a vague chest discomfort: not sharp or crushing, just a strange tightness.

Her AI app said:

“Low risk of heart issues. Possible causes: anxiety, acid reflux, or muscle pain.”

She waited. Then the pain spread to her left arm. That’s when she saw the cardiologist.

He didn’t just hear her words. He noticed her silence, her unease, her body language. He listened—not just with his ears, but with full attention.

He ordered a stress echocardiogram.

The result? A rare congenital heart condition—a hidden danger that could have been fatal.

The doctor later said:

“The machine never asked about her intuition. It never saw her face. It never felt her worry. That’s where it failed.”

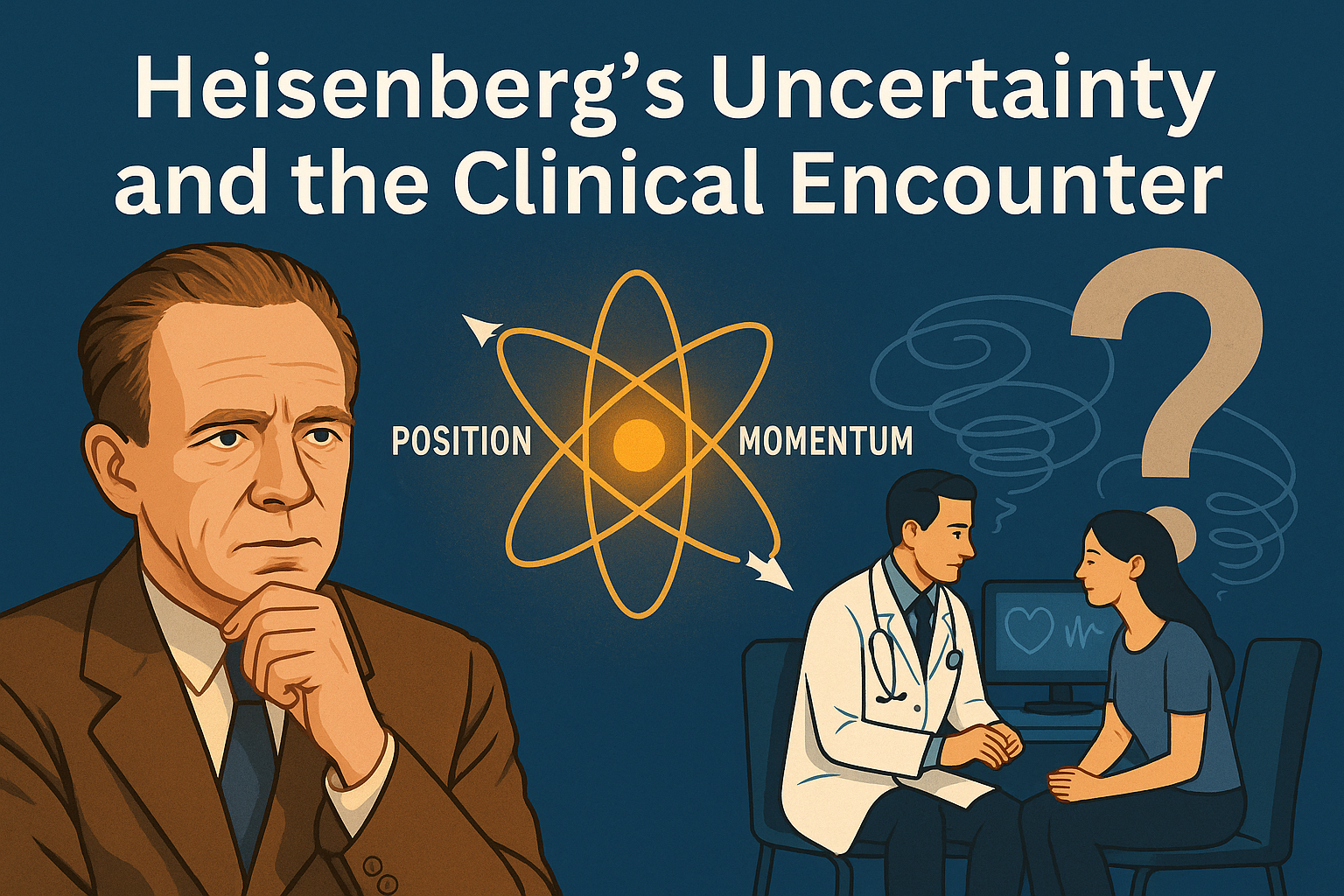

Why Machines Can’t Notice the Human Silence

As a doctor—and as a lifelong learner—I believe medicine is more than science. It’s not just solving equations. It’s about understanding people: their fears, their feelings, and sometimes, what they’re not saying.

AI can analyze patterns. But it doesn’t feel. It doesn’t wonder. It can’t connect emotional dots when something just “doesn’t feel right.”

This isn’t just a technical gap.

It’s a deeply human one.

There’s a vast difference between knowing something and understanding someone.

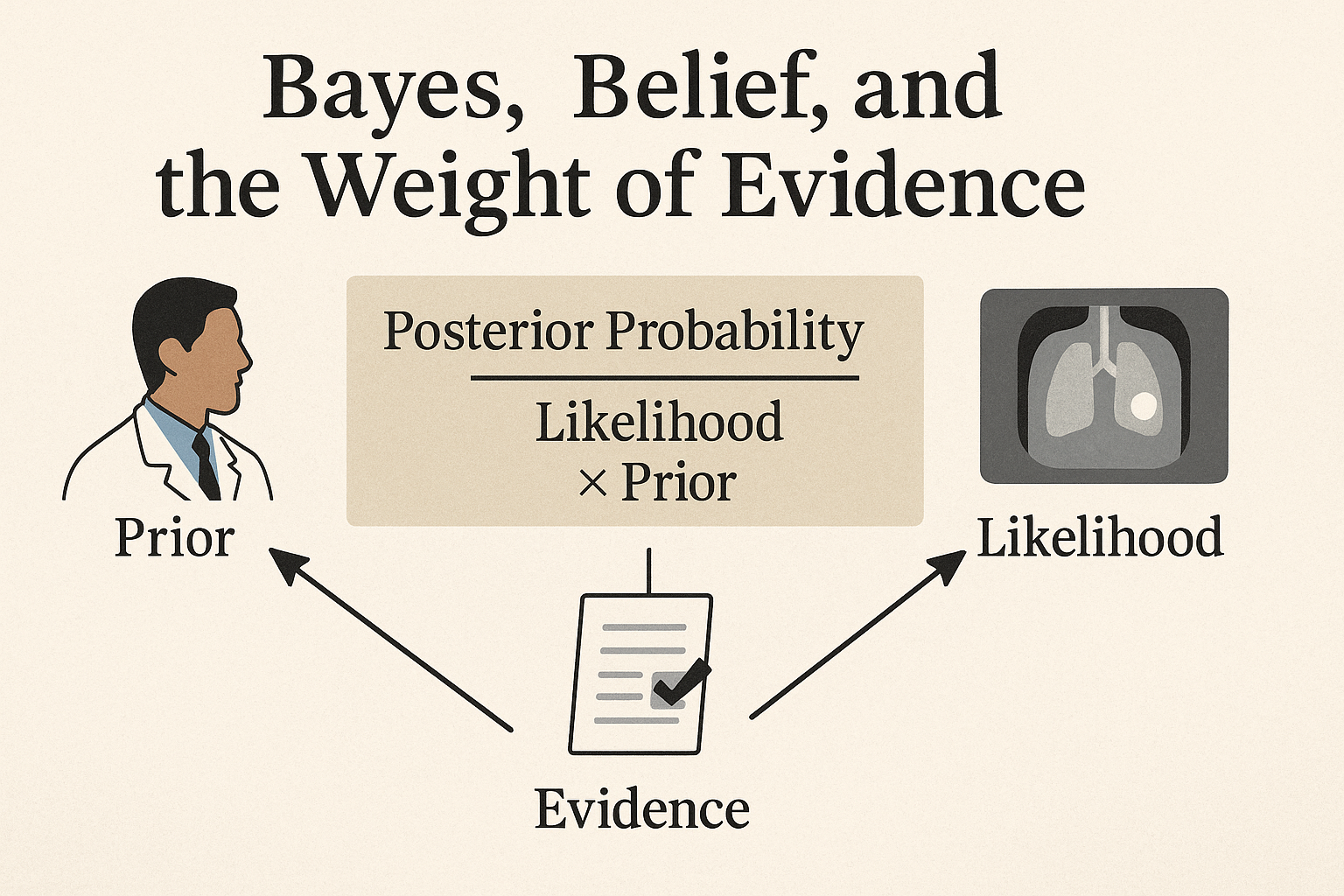

Gödel and the Limits of AI in Medicine

In the next part of this series, we’ll go even deeper.

We’ll explore Gödel’s Incompleteness Theorem, a landmark result in mathematical logic showing that any consistent formal system powerful enough to express basic arithmetic contains true statements that it cannot prove within its own rules.

If logic has limits, how can intelligence—artificial or otherwise—ever be complete?

This will help us understand why AI still can’t replace human reasoning, especially in the nuanced world of medical diagnosis.

In Part 2, we’ll see how Gödel’s Incompleteness Theorem reshapes our understanding of AI in medicine—and why no algorithm can ever capture the full mystery of human health.

Final Thought

Because healing begins where data ends.

A machine can scan the body,

but only a human can sense the soul behind the symptoms.

As we embrace AI in medicine, let’s not forget:

The most powerful diagnostic tool is still human attention.

— Dr. Abhijeet Shinde | The Thinking Healer

References

- McKinney SM, Sieniek M, Godbole V, et al. International evaluation of an AI system for breast cancer screening. Nature. 2020;577(7788):89-94. doi:10.1038/s41586-019-1799-6. PMID:31894144.

- Eisemann N, Bunk S, Mukama T, et al. Nationwide real-world implementation of AI for cancer detection in population-based mammography screening. Nature Medicine. 2025;31(3):917-924. doi:10.1038/s41591-024-03408-6. PMCID:PMC11922743.

- Hyland SL, Faltys M, Hüser M, et al. Machine learning for early prediction of circulatory failure in the intensive care unit. Nature Medicine. 2020;26(3):364-375. doi:10.1038/s41591-020-0789-4.

- Kim YK, Seo WD, Lee SJ, et al. Early prediction of cardiac arrest in the intensive care unit using explainable machine learning: retrospective study. Journal of Medical Internet Research. 2024;26:e62890. doi:10.2196/62890. PMID:39288404.

- Rasmy L, Xiang Y, Xie Z, et al. Med-BERT: pretrained contextualized embeddings on large-scale structured electronic health records for disease prediction. npj Digital Medicine. 2021;4(1):86. doi:10.1038/s41746-021-00455-y.

Coming next –

Blog Series 2: Gödel’s Theorem Proves Why AI Medical Diagnosis Will Always Have Fatal Flaws
