Artificial Intelligence (AI) is transforming the way we work, think, and heal. From medical diagnoses to language generation, machines appear to “think” like humans. But do they really?

The truth is: AI calculates; humans interpret. Minds are not algorithms.

This article explores the deep philosophical and medical divide between machine cognition and human understanding—from syntax vs. semantics to the phenomenology of illness.

The Illusion of Equivalence

It is tempting to view the human brain as an organic computer that stores and processes information. The metaphor feels simple, but simplicity is not truth.

Taking this metaphor too seriously risks collapsing the distinction between information and meaning. The human mind is not merely functional—it is existential. It inhabits a world filled with uncertainties, emotions, and moral weight.

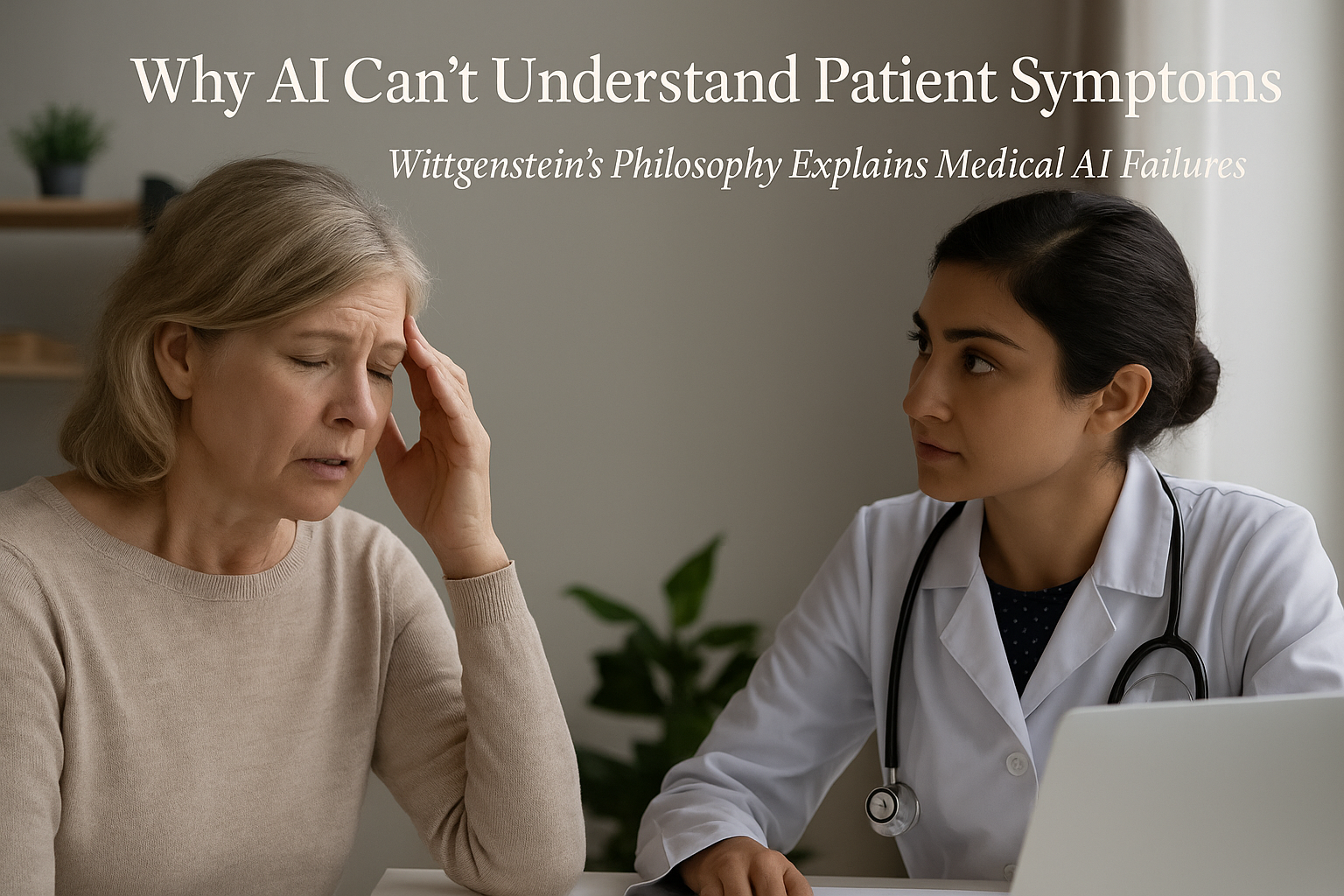

The First Gap: Syntax vs. Semantics

Philosopher John Searle’s Chinese Room Argument illustrates this divide:

- A person who knows no Chinese can follow a rulebook to manipulate Chinese symbols and produce fitting replies, without grasping what any of them mean.

- The output may look intelligent, but there is no comprehension.

This is how AI works—it manipulates syntax (patterns, forms) but lacks semantics (meaning).

Humans do not just match symbols. We live their significance.

The Second Gap: Being in the World

Philosopher Martin Heidegger argued that cognition cannot be detached from our lived reality, which he described as being-in-the-world.

- AI “knows” by correlating patterns.

- Humans know by being immersed in meaning.

For example, a physician does not simply process lab data. They interpret a patient’s story—their struggles, their family’s worry, and the existential weight of illness.

This is more than data processing. It is interpretation in context.

The Third Gap: The Weight of Finitude

The human mind carries the awareness of its own mortality.

- AI does not die.

- AI does not hope or fear.

- AI does not long for meaning.

This existential awareness shapes every human decision. To an AI, a medical diagnosis is a classification problem. To a human, it is a turning point in a life’s narrative.

Mary’s Room and the Limits of Machine Knowing

Philosopher Frank Jackson’s thought experiment known as Mary’s Room suggests that facts are not experiences.

Mary is a scientist who knows every physical fact about color but has lived her whole life in a black-and-white room. The moment she sees red, she learns something entirely new: what it is like to see it.

AI is like Mary in the room:

- It can know all clinical facts.

- But it cannot experience illness.

- It can calculate risk but never feel fear.

This is why machine “knowing” will always lack the lived reality of human experience.

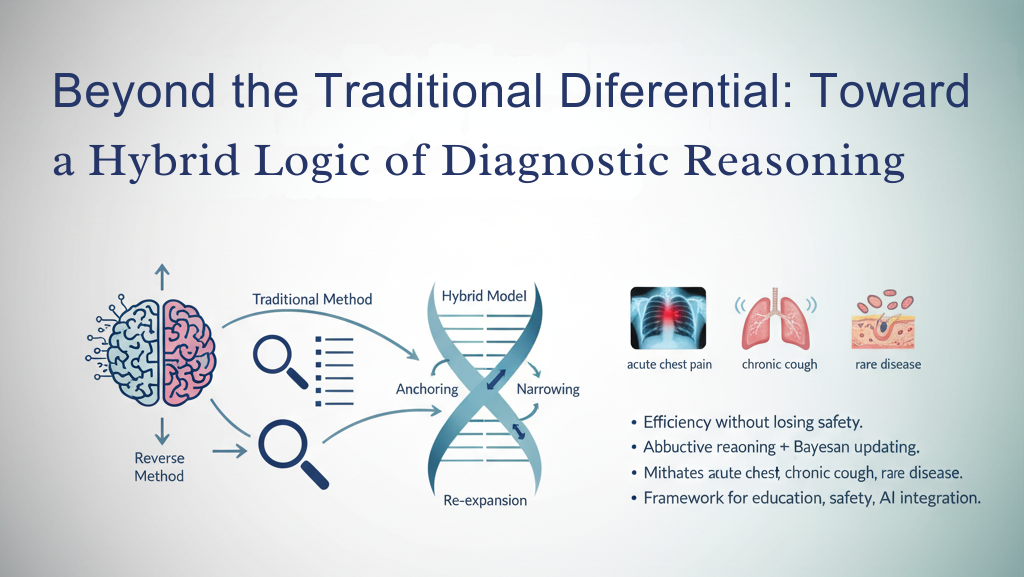

The AI Parallel in Medicine

In healthcare, the difference is profound:

- AI calculates patterns.

- Humans interpret meaning.

A medical diagnosis is more than numbers. It is a story of suffering, hope, and choices. Only a human physician can hold that weight.

Medicine as a Meaning-Making Practice

AI may suggest a treatment with a 94% probability of success.

But the physician must ask: Does this align with the patient’s values, hopes, and dignity?

- The first is calculation.

- The second is wisdom.

This is why medicine is not just a science—it is a meaning-making practice.

Why Minds Are Not Algorithms

- An algorithm is finite, repeatable, and closed.

- A mind is open-ended, shaped by memory, culture, imagination, and morality.

The human mind does not just solve problems. It questions the framing of the problem itself.

That capacity for questioning and reinterpreting meaning cannot be replicated by machines.

The Thinking Healer’s Wisdom

AI may simulate thought, but simulation is not being.

The Thinking Healer must remember:

- Machines can calculate the patterns of life.

- Only humans can bear its weight.

Why Medicine Needs More Than Machines

The future of AI in medicine and philosophy is not about replacing human minds but about partnering with them. AI processes data, but humans interpret meaning.

That is why minds are not algorithms—and why the art of healing will always remain profoundly human.
