—Inspired by Thomas Bayes

“Evidence doesn’t speak for itself. It whispers through the lens of belief.”

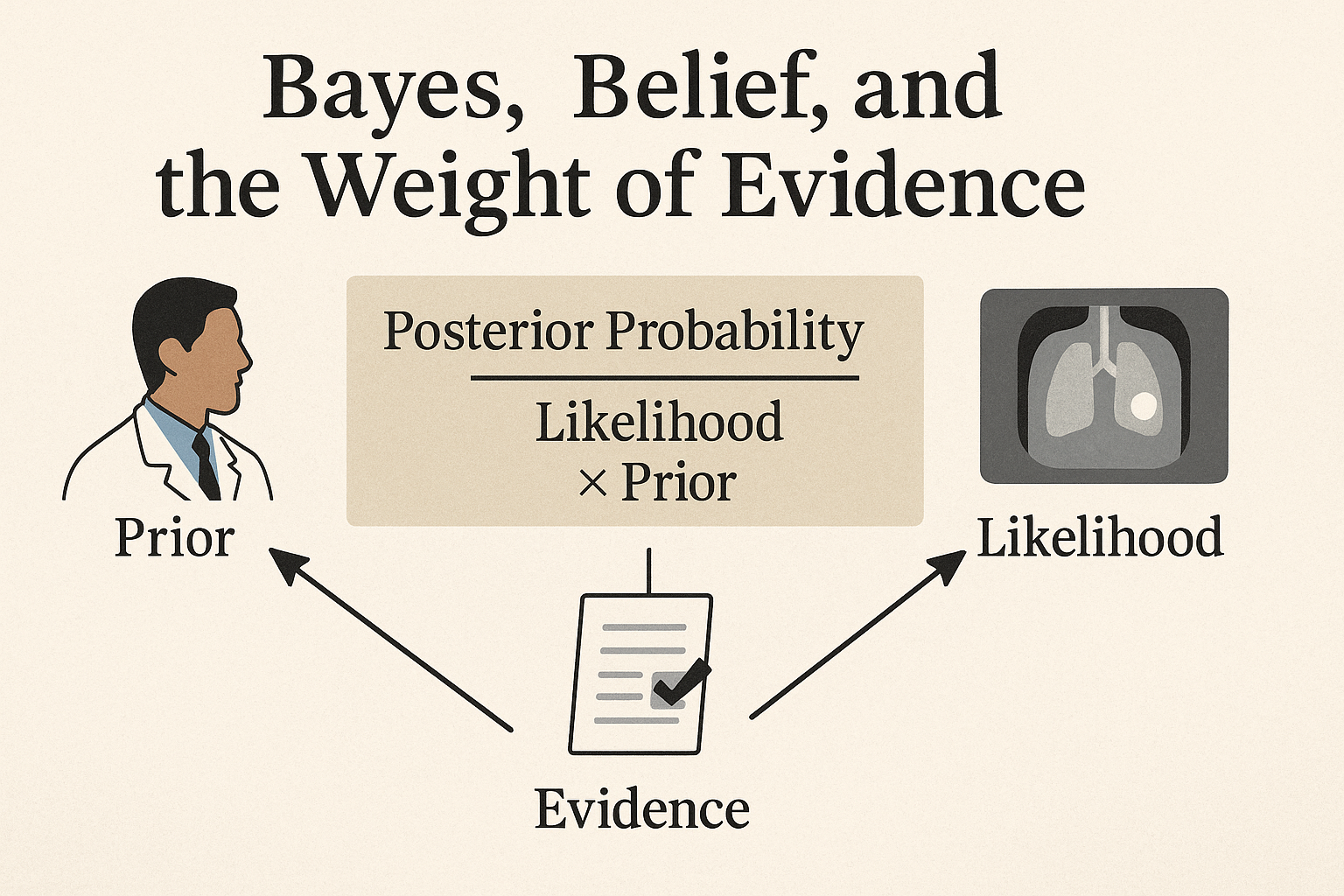

Bayes’ Theorem: What Is It?

At its core, Bayes’ theorem is a mathematical formula for updating beliefs in light of new evidence.

Posterior Probability = (Likelihood × Prior) / Evidence

Put simply:

– What you already believed (prior)

– Combined with how likely the new evidence would be if that belief were true (likelihood)

– Leads to what you now believe (posterior)

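The “Evidence” term is the overall probability of seeing the result across all possibilities. Dividing by it makes the updated probabilities add up to 100%, like a scale balancing your updated belief against all possible outcomes.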
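For a single diagnosis D and a test result T, the formula takes the standard form below. The Evidence term in the denominator is expanded over the two possibilities, disease present and disease absent:

```latex
P(D \mid T) = \frac{P(T \mid D)\,P(D)}{P(T)}
            = \frac{P(T \mid D)\,P(D)}{P(T \mid D)\,P(D) + P(T \mid \neg D)\,P(\neg D)}
```

Here P(D) is the prior, P(T | D) the likelihood, P(T) the evidence, and P(D | T) the posterior.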

➡️ Probability of a diagnosis after a test ≠ Probability before the test.

Belief in Medicine: Not Just Faith, But Framing

In clinical practice, Bayesian thinking reframes common situations:

“This test is positive.” → How likely was the disease to begin with?

“CT is normal.” → Does that change our level of concern?

“She looks septic, but all labs are normal.” → Could your prior be stronger than the labs?

👉 Your belief before any test shapes how you interpret the test.

The Illusion of Certainty: When Tests Mislead

Tests don’t give truth. They shift probability.

Example: Negative troponin in a 76-year-old with chest pressure and diabetes. Can you rule out myocardial infarction (MI)? Not yet—because the prior is high.

Let’s put numbers on a similar case:

A 65-year-old man with chest pain and a history of smoking has a negative troponin.

– Prior probability: 20% (0.2)

– Sensitivity: 90%

– Specificity: 85%

– Posterior probability of MI after a negative test: ~3% (down from 20%, but not zero)
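A minimal Python sketch of that arithmetic, assuming the sensitivity and specificity above apply to this patient. The function name `posterior_after_test` is ours, not from any library:

```python
def posterior_after_test(prior: float, sensitivity: float, specificity: float,
                         positive: bool) -> float:
    """Return P(disease | test result) via Bayes' theorem."""
    if positive:
        # True positives vs. false positives
        p_result_given_disease = sensitivity
        p_result_given_no_disease = 1 - specificity
    else:
        # False negatives vs. true negatives
        p_result_given_disease = 1 - sensitivity
        p_result_given_no_disease = specificity
    numerator = p_result_given_disease * prior  # likelihood x prior
    evidence = numerator + p_result_given_no_disease * (1 - prior)
    return numerator / evidence


# 65-year-old smoker with chest pain and a negative troponin
p = posterior_after_test(prior=0.20, sensitivity=0.90, specificity=0.85, positive=False)
print(f"{p:.1%}")
```

The 20% prior falls to about 2.9%: low, but not zero.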

➡️ Bayesian medicine is not yes or no. It is “how much more (or less) likely now?”

The Trap of False Results: Bayes Keeps You Grounded

Tests can trick you.

– False Positives: When disease is unlikely to begin with, many positive results in low-risk patients are false positives.

– False Negatives: A negative test in a high-risk patient doesn’t rule out disease.

Example: Rapid flu test (70% sensitivity, 90% specificity).

– Positive test raises probability from 30% to ~75%.

– Negative test lowers it to ~12.5%.
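The same numbers fall out of the odds form of Bayes’ theorem, which turns sensitivity and specificity into likelihood ratios (LRs). A short Python sketch, assuming the 30% pretest probability above:

```python
def to_odds(p: float) -> float:
    return p / (1 - p)


def to_prob(odds: float) -> float:
    return odds / (1 + odds)


sensitivity, specificity, pretest = 0.70, 0.90, 0.30

# Likelihood ratios summarize how much each result shifts the odds
lr_positive = sensitivity / (1 - specificity)  # about 7
lr_negative = (1 - sensitivity) / specificity  # about 0.33

# Posterior odds = prior odds x likelihood ratio
post_pos = to_prob(to_odds(pretest) * lr_positive)
post_neg = to_prob(to_odds(pretest) * lr_negative)
print(f"positive: {post_pos:.1%}, negative: {post_neg:.1%}")
```

A positive result multiplies the odds by about 7 and a negative result by about 1/3, giving 75% and 12.5%.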

➡️ Bayes reminds you: Don’t trust the test alone. Let the prior and the test’s limits guide you.

AI in Bayesian Medicine: Where Machines Meet Uncertainty (and Sometimes Miss)

AI promises to supercharge Bayesian reasoning—but it isn’t a diagnostic oracle.

Why AI Misses:

– Correlation vs. Causation Trap

– Biased Priors from Flawed Data

– Struggles with Rarity and Routine

– Uncertainty Blind Spots

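Example: In a 2021 external validation, a widely implemented proprietary sepsis prediction model missed 67% of sepsis cases, a reminder that a model built on one population’s data can fail in another (Wong et al., 2021).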

➡️ The Thinking Healer’s Take: AI isn’t the enemy of Bayes—it’s a tool. Use it, but overlay clinical judgment.
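One concrete way to overlay judgment is to ask what base rate an AI risk score assumes. The Python sketch below shows a standard prior-shift adjustment. It assumes, hypothetically, that the model is well calibrated in its training population and that only disease prevalence differs locally. The numbers and the function name `adjust_for_local_prevalence` are illustrative, not taken from any real model:

```python
def to_odds(p: float) -> float:
    return p / (1 - p)


def to_prob(odds: float) -> float:
    return odds / (1 + odds)


def adjust_for_local_prevalence(model_prob: float,
                                training_prevalence: float,
                                local_prevalence: float) -> float:
    """Re-anchor a calibrated model probability to a different base rate.

    Dividing out the training prior odds leaves the model's implied
    likelihood ratio; multiplying by the local prior odds re-applies Bayes.
    """
    implied_lr = to_odds(model_prob) / to_odds(training_prevalence)
    return to_prob(to_odds(local_prevalence) * implied_lr)


# Hypothetical: a 30% score from a model trained where prevalence was 20%,
# used on a ward where prevalence is closer to 5%
p = adjust_for_local_prevalence(0.30, training_prevalence=0.20, local_prevalence=0.05)
print(f"{p:.1%}")
```

Under these assumptions the same 30% score means roughly 8% where the disease is rarer.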

Thinking Healer as a Bayesian Clinician

The Thinking Healer doesn’t just collect data. They update beliefs in real time.

Clinical examples:

– Vague complaint + red flag → suspicion rises from 5% to 20%.

– Negative test contradicts hunch → reassess from 50% to ~30%.

– Family history revealed late → suspicion rises from 25% to 60%.
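Each of these updates implies a likelihood ratio: how many times more (or less) likely the new finding is if the diagnosis is present. The Python sketch below back-calculates it from the before and after probabilities listed, treating them as illustrative estimates:

```python
def implied_likelihood_ratio(before: float, after: float) -> float:
    """How strongly a finding must have counted, given the update it produced."""
    odds_before = before / (1 - before)
    odds_after = after / (1 - after)
    return odds_after / odds_before


updates = {
    "vague complaint + red flag": (0.05, 0.20),
    "negative test contradicts hunch": (0.50, 0.30),
    "family history revealed late": (0.25, 0.60),
}
for finding, (before, after) in updates.items():
    print(f"{finding}: LR ~ {implied_likelihood_ratio(before, after):.2f}")
```

The three updates imply LRs of about 4.75, 0.43, and 4.5. Making the implied LR explicit is one way to check whether an update is proportionate to the evidence behind it.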

This is living reasoning, not checklist thinking.

Diagnostic Anchoring vs. Probabilistic Fluidity

Bayesian thought is fluid and revisable. But bias fixes us in place:

– Anchoring: “I’ve made my diagnosis.”

– Confirmation: “I’ll just find tests that prove it.”

– Premature Closure: “That explains it. Done.”

➡️ Bayes demands we reopen the case every time new evidence appears.
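Reopening the case can be made literal: each new finding updates the current belief, and that posterior becomes the prior for the next finding. A minimal Python sketch with hypothetical likelihood ratios chosen only for illustration. Chaining them this way assumes the findings are conditionally independent given the diagnosis:

```python
def update(prob: float, likelihood_ratio: float) -> float:
    """One Bayesian update in odds form: posterior odds = prior odds x LR."""
    odds = prob / (1 - prob) * likelihood_ratio
    return odds / (1 + odds)


# Hypothetical case: start at 10%, then three findings arrive in turn
belief = 0.10
history = [belief]
for lr in (3.0, 0.5, 4.0):  # supportive, reassuring, strongly supportive
    belief = update(belief, lr)
    history.append(belief)
print([f"{p:.0%}" for p in history])
```

The belief rises to 25%, falls to about 14% after the reassuring finding, then climbs to 40%; no single step closes the case.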

The Role of Clinical Judgment: Where Art Meets Math

Bayes reminds us: not all beliefs are irrational—some are hard-earned intuitions.

When a senior physician says: “I don’t like this presentation,” that’s a Bayesian prior speaking.

The Thinking Healer:

– Respects evidence

– Honors context, history, and gut sense

– Balances data with diagnostic empathy

Bayesian Medicine Is Not Optional

We practice it every day:

– “I think it’s viral.” → Low prior → No test needed

– “He’s high-risk.” → Moderate prior → Test carefully

– “Despite the negative CT, I still worry.” → Strong prior outweighs weak test
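The last case can be checked with numbers. The Python sketch below assumes a hypothetical test with 70% sensitivity and 80% specificity (not the performance of CT for any particular condition) and applies the same negative result at three different priors:

```python
def posterior_after_negative(prior: float, sensitivity: float, specificity: float) -> float:
    """P(disease | negative result) via Bayes' theorem."""
    false_neg = (1 - sensitivity) * prior
    true_neg = specificity * (1 - prior)
    return false_neg / (false_neg + true_neg)


# Hypothetical "weak" test: 70% sensitivity, 80% specificity
for prior in (0.10, 0.50, 0.80):
    post = posterior_after_negative(prior, sensitivity=0.70, specificity=0.80)
    print(f"prior {prior:.0%} -> after negative {post:.0%}")
```

A negative result drops a 10% prior to about 4%, but an 80% prior only to about 60%: when the prior is strong and the test is weak, continued worry is reasonable.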

➡️ Bayesianism isn’t just a formula. It’s a philosophy of reasoning.

Reflection: The Belief That Learns

Bayesian thinking teaches the Thinking Healer:

– Beliefs aren’t static—they evolve

– Evidence doesn’t replace intuition—it reshapes it

– Learning medicine = learning how to believe better

– When using AI, ask: Does this tool respect my prior, or impose a biased one?

Try This Today: Pause before interpreting tests or AI scores. Write down your suspicion first and see how evidence adjusts it.

It’s not about being right from the start. It’s about becoming less wrong with every step.

Final Thought

“The test doesn’t give you truth. It gives you weight.” — Thinking Healer Manifesto

References

Ferryman, K., & Pitcan, M. (2018). Fairness in Precision Medicine. Data & Society.

Ferryman, K., & Winn, R. A. (2018, Nov 16). Artificial intelligence can entrench disparities—here’s what we must do. The Cancer Letter.

Goh, E., et al. (2024). Large Language Model Influence on Diagnostic Reasoning: A Randomized Clinical Trial. JAMA Network Open.

Pahud de Mortanges, A., et al. (2024). Orchestrating explainable AI for multimodal and longitudinal datasets in clinical care. npj Digital Medicine.

Topol, E. (2024). Multimodal AI for medicine, simplified. Substack.

Wong, A., Otles, E., & Donnelly, J. P. (2021). External validation of a widely implemented proprietary sepsis prediction model in hospitalized patients. JAMA Internal Medicine, 181(8), 1065–1070.

Disclaimer

This article is for educational and reflective purposes only. It is not a substitute for professional medical advice, diagnosis, or treatment. Clinical examples are simplified for illustration and should not be applied directly to patient care without appropriate medical evaluation and context.

Coming Next

Blog Series 6: Why AI Can’t Understand Wittgenstein
